Teleoperation of Reachy using Mediapipe and BlendArMocap

Using MediaPipe to estimate 3D pose and transfer the motion onto a 7-DoF robot arm for teleoperation.

About the project

For Reachy, teleoperation means using a computer or other control device to move and manipulate the robotic arm remotely. This is useful when a human operator cannot be physically present to operate the robot, or when the task requires precision or speed that a human operator may not be able to achieve.

BlendArMocap is a Blender add-on that performs hand, face, and pose detection using Google's MediaPipe. The detected data can be easily transferred to Rigify rigs.

MediaPipe is a framework for building cross-platform multimodal applied machine learning pipelines. It can be used for tasks such as pose detection and pose transfer.

Pose detection is the process of detecting the posture or position of a person or object in an image or video. This can be useful for applications such as tracking human movement, recognizing gestures, and analyzing body language. MediaPipe provides tools and pre-built models for pose detection that can be used to build pose detection pipelines.
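As an illustration of how detected pose landmarks might feed a robot arm, the sketch below computes the angle at a joint from three 3D points (for example shoulder, elbow, and wrist). It is a minimal example under stated assumptions, not the mapping used in this project: the function name `joint_angle_deg` and the sample coordinates are hypothetical, and the points are assumed to be plain `(x, y, z)` tuples already extracted from the pose output.

```python
import math


def joint_angle_deg(a, b, c):
    """Return the angle at point b, in degrees, between segments b->a and b->c.

    a, b, c are (x, y, z) tuples, e.g. shoulder, elbow, wrist landmarks.
    """
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0.0 or n2 == 0.0:
        # Two landmarks coincide, so the angle is undefined.
        raise ValueError("coincident landmarks; angle undefined")
    cos_t = sum(p * q for p, q in zip(v1, v2)) / (n1 * n2)
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_t = max(-1.0, min(1.0, cos_t))
    return math.degrees(math.acos(cos_t))


# Hypothetical sample points: upper arm along y, forearm along x.
shoulder = (0.0, 0.0, 0.0)
elbow = (0.0, 0.3, 0.0)
wrist = (0.3, 0.3, 0.0)
elbow_angle = joint_angle_deg(shoulder, elbow, wrist)
```

An angle computed this way depends only on the relative positions of the three points, so it is unaffected by where the person stands in the frame. Mapping it onto an actual joint of the arm would still require the robot's own joint limits and sign conventions.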

Pipeline

Mediapipe

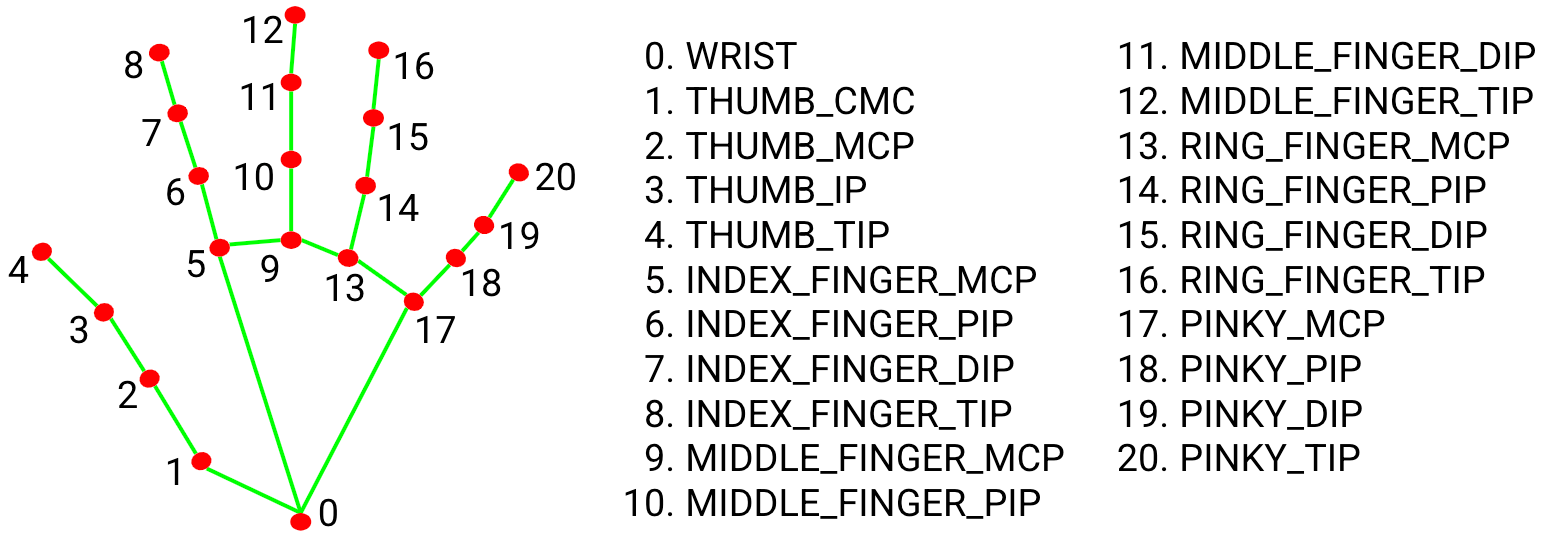

MediaPipe Holistic combines individual neural models for face mesh, human pose, and hands, yielding 543 landmarks in total (33 pose landmarks, 468 face landmarks, and 21 hand landmarks per hand). Every landmark has $x$, $y$, and $z$ coordinates; pose landmarks additionally carry a visibility value.
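The landmark layout described above can be sketched in a few lines of plain Python. This is only an illustration of the counts and per-landmark fields; the `Landmark` class and the `flatten` helper are hypothetical names introduced here and are not part of the MediaPipe API.

```python
from dataclasses import dataclass
from typing import List, Optional

# Landmark counts as stated in the text above.
POSE_LANDMARKS = 33
FACE_LANDMARKS = 468
HAND_LANDMARKS = 21  # per hand


@dataclass
class Landmark:
    """Hypothetical container: x, y, z for all landmarks, visibility for pose only."""
    x: float
    y: float
    z: float
    visibility: Optional[float] = None


def total_landmarks() -> int:
    """Pose + face + two hands."""
    return POSE_LANDMARKS + FACE_LANDMARKS + 2 * HAND_LANDMARKS


def flatten(landmarks: List[Landmark]) -> List[float]:
    """Flatten landmarks to a list of floats (4 values for pose, 3 otherwise)."""
    values: List[float] = []
    for lm in landmarks:
        values.extend((lm.x, lm.y, lm.z))
        if lm.visibility is not None:
            values.append(lm.visibility)
    return values


# Dummy data, only to show the resulting vector sizes.
pose = [Landmark(0.0, 0.0, 0.0, visibility=1.0) for _ in range(POSE_LANDMARKS)]
hand = [Landmark(0.0, 0.0, 0.0) for _ in range(HAND_LANDMARKS)]
pose_vector = flatten(pose)
hand_vector = flatten(hand)
```

With this layout, a pose frame flattens to 33 × 4 values and a single hand to 21 × 3 values, which is a convenient size check when passing landmark data between stages of a pipeline.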

Hand Landmark Model

Results

Pose Detection using BlendArMocap

Teleoperation - Gripper